Advertisement

Creating a dataset is often the first task in any project that uses machine learning, data analysis, or automation. While downloading existing datasets is convenient, there are situations where custom data is a better fit. Maybe you're solving a problem that hasn’t been tackled before, or you just want more control over what’s going into your model. Building your own dataset gives you that freedom. Python, with its wide range of tools and libraries, makes this process less intimidating than it sounds. Here are six reliable ways to create datasets from scratch using Python, each suited for different kinds of data needs.

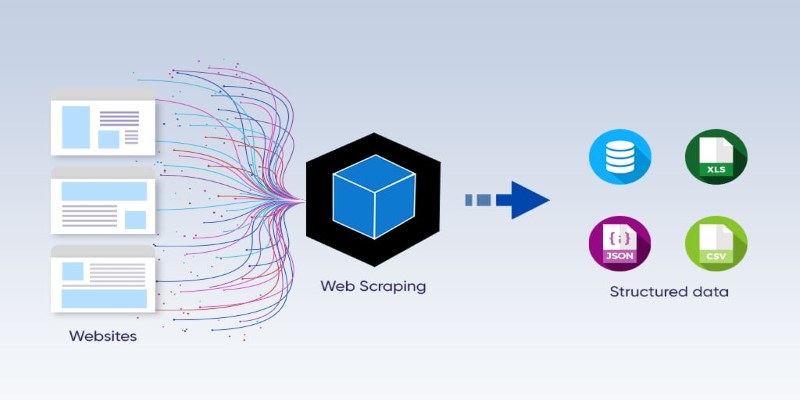

If the data you require is already online, you can create a script to retrieve it for you automatically. Python requests and BeautifulSoup libraries are suitable for static pages, whereas Selenium is more appropriate for sites that load material dynamically via JavaScript.

You start by identifying the structure of the page—HTML tags, classes, or IDs that hold the data. Once you understand where the data lives, you can use BeautifulSoup to parse it. With requests.get(), you can fetch the page’s source and extract the relevant sections. If buttons, dropdowns, or pagination are involved, Selenium can simulate clicks and scrolls.

It's useful when you're trying to gather text, prices, ratings, images, or articles. However, scraping large amounts of data might require handling CAPTCHAs, setting delays between requests, or rotating proxies.

APIs are made for structured data exchange. If the source you’re targeting offers an API, use it. Most platforms—like Twitter, Reddit, GitHub, or financial sites—have endpoints that return data in JSON format.

You can access these endpoints using the requests module. For example, calling requests.get(url) returns a response that you can parse with .json(). This way, you don’t have to worry about parsing HTML or handling broken layouts.

Each API comes with its own rate limits and authentication methods. Some use API keys in the header, others rely on OAuth. Once authenticated, you send GET or POST requests and collect the returned data in a list or dictionary.

If you plan to fetch data regularly, you can automate the process using cron jobs or schedule it in Python. APIs are the cleanest way to build datasets with structured fields like timestamps, locations, and categories.

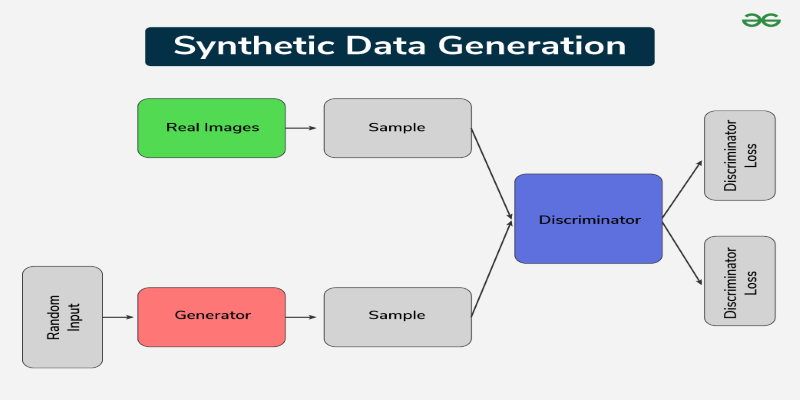

Sometimes you don't need real-world data—just something that resembles it. Whether you’re testing an algorithm or trying to build a proof of concept, synthetic data can be a practical choice.

Python's random, faker, and numpy libraries can be used for this. random helps in generating simple values like numbers, strings, or choices from a list. numpy is better for numerical arrays or more complex distributions like normal, Poisson, or binomial. If you're trying to simulate people, companies, or addresses, faker generates names, emails, phone numbers, and even job titles.

With these tools, you can define rules and boundaries for your data. For example, simulate customer records with names, ages between 18 and 65, purchase amounts, and fake addresses. You can set correlations too, like ensuring that higher income values align with higher spending.

This method is ideal when privacy is a concern or when real-world examples are hard to come by.

Sometimes data is already sitting on your computer, just not in the format you need. You can extract data from PDFs, Word documents, Excel sheets, or plain text files using various libraries.

To handle Excel files, pandas.read_excel() works with both .xls and .xlsx. For CSV files, pandas.read_csv() does the job. For PDFs, PyPDF2, pdfplumber, or pdfminer can help pull out the text. Word documents are manageable using python-docx.

Let’s say you have 100 PDFs with financial tables. With pdfplumber, you can loop through each file, locate the relevant section, and pull it into a structured DataFrame.

If the files are not organized consistently, you may need regular expressions to match patterns or string manipulation tools to clean up the noise.

This method works best when working with internal records, scanned reports, or any documents you've already collected.

If your task is classification, detection, or segmentation, you often need labeled data. You can’t always find ready-made datasets with the exact categories you're after. This means you’ll need to create your own.

For text, you can store sentences or documents in a list, and then manually tag each with a label, like "positive", "negative", or "neutral". You can use Python scripts with simple command-line prompts or even build a basic GUI using tkinter.

For image labeling, tools like labelImg, labelme, or makesense.ai allow you to draw bounding boxes or masks. Once labeled, the files are saved as XML, JSON, or CSV, depending on the tool. These can be read in Python and converted into usable datasets for model training.

Although manual labeling is time-consuming, it gives you complete control over the quality and relevance of the data.

At times, one source doesn’t cover everything. You may have to piece together information from several places to build a dataset that suits your needs.

Python makes it easy to merge different files or data streams. For structured data, pandas.merge() or concat() allows you to join tables based on common fields. For unstructured data like logs, you can write scripts to clean and unify the format before saving them.

Imagine you’re building a dataset of restaurants. One site gives you addresses, another gives customer reviews, and a third lists menu items. You can scrape or extract each part separately, then merge them using a unique identifier like the business name or location.

This is useful when each source offers only part of the picture and you want a dataset that’s rich, detailed, and accurate.

Creating a dataset in Python doesn’t follow a single formula. The method you choose depends on what kind of data you need and where it's coming from. Whether you’re scraping websites, pulling data from APIs, generating synthetic examples, labeling content manually, or combining bits and pieces from various places, Python gives you the tools to do it cleanly and efficiently. Each method has its own learning curve, but once you’ve built your own dataset, you get something far more valuable than just rows and columns—it’s data that actually fits your purpose.

Advertisement

Ask QX by QX Lab AI is a multilingual GenAI platform designed to support over 100 languages, offering accessible AI tools for users around the world

Learn how the zip() function in Python works with detailed examples. Discover how to combine lists in Python, unzip data, and sort paired items using clean, readable code

Learn how to convert strings to JSON objects using tools like JSON.parse(), json.loads(), JsonConvert, and more across 11 popular programming languages

Learn how AI parameters impact model performance and how to optimize them for better accuracy, efficiency, and generalization

Stay informed with the best AI news websites. Explore trusted platforms that offer real-time AI updates, research highlights, and expert insights without the noise

Learn about Inception Score (IS): how it evaluates GANs and generative AI quality via image diversity, clarity, and more.

How to create an AI voice cover using Covers.ai with this simple step-by-step guide. From uploading audio to selecting a voice and exporting your track, everything is covered in detail

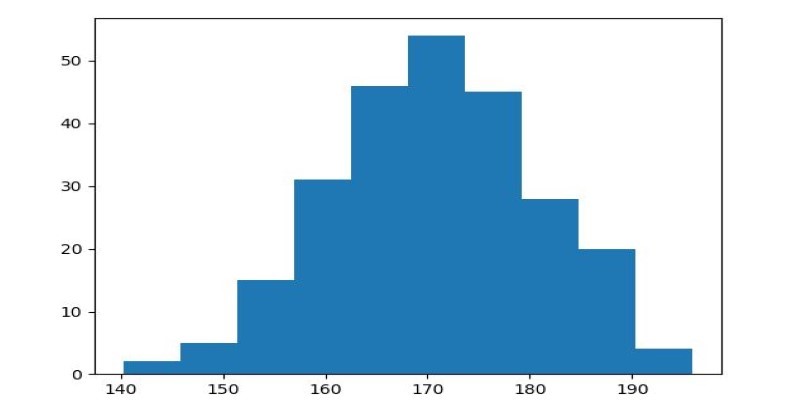

How to use Matplotlib.pyplot.hist() in Python to create clean, customizable histograms. This guide explains bins, styles, and tips for better Python histogram plots

Looking to turn your images into stickers? See how Replicate's AI tools make background removal and editing simple for clean, custom results

Learn seven methods to convert a string to an integer in Python using int(), float(), json, eval, and batch processing tools like map() and list comprehension

Discover multilingual LLMs: how they handle 100+ languages, code-switching and 10 other things you need to know.

Learn 8 effective methods to add new keys to a dictionary in Python, from square brackets and update() to setdefault(), loops, and defaultdict