
The legal framework governing generative artificial intelligence is struggling to keep pace with its technical developments. One of the most visible examples of this ongoing drama is the lawsuit against Anthropic, the AI company behind the large language model Claude. Several major music publishers have sued, raising the question of whether training AI on copyrighted song lyrics qualifies as fair use. The answer could affect both copyright protection and AI advancement.

In October 2023, Universal Music Group, Concord, and ABKCO Music sued Anthropic in U.S. District Court. They argue that Anthropic used copyrighted song lyrics to train Claude without permission, and that the AI system can therefore reproduce those lyrics in response to user prompts, infringing the publishers' copyrights. The original complaint included lines from Gloria Gaynor's "I Will Survive" and Katy Perry's "Roar." Claude's accurate reproduction of the lyrics worried the publishers, as it suggested unauthorised use of their intellectual property.

Anthropic defended its training practices, arguing that any reproduction of lyrics was accidental and never intended. The company stressed that such outputs were unintended consequences of the model's probabilistic nature, flaws to be fixed.

For Anthropic, fair use, which permits limited use of copyrighted material under certain conditions, was essential. Arguments for fair use in AI usually rest on the idea that training models on massive datasets, even copyrighted ones, is transformative: it teaches the AI not to replicate works but to produce new output. The publishers objected that using entire copyrighted works is unacceptable, especially when the AI can reproduce them almost verbatim. They also claim it damages the market for licensed lyrics, particularly commercial licensing to apps, websites, and other services.

The topic divides legal experts. Some argue that AI training is transformative, much like a person reading and learning. Others contend that using copyrighted content wholesale, without permission or compensation, undermines creators' rights and sets a dangerous precedent.

In March 2025, the case took a new turn. A federal court in California denied the publishers' request for a preliminary injunction that would have barred Anthropic from using their lyrics to train Claude or reproducing them in Claude's outputs. The court sided with Anthropic for now because the plaintiffs had failed to show irreparable harm. The decision was a win for Anthropic and for AI developers more broadly. However, the court emphasised that the questions of fair use and copyright infringement had yet to be decided. The ruling did not endorse Anthropic's conduct; it simply left those questions to a fuller legal process.

Shortly after the ruling, the music publishers amended their complaint with further evidence. According to the new allegations, Claude still reproduced copyrighted lyrics verbatim. Citing Bob Dylan's "Highway 61 Revisited" and Neil Diamond's "Sweet Caroline," the publishers alleged that the model could produce verses on demand.

The amended complaint also cited internal communications that could prove damaging to Anthropic. According to the filing, company engineers discussed removing copyright management information (CMI), such as copyright notices, from documents used in training, including data collected online. One message suggested stripping copyright notices from lyrics in the training dataset; another called them "useless junk."

These revelations could undermine Anthropic's fair use argument. Liability for removing CMI turns on intent, so deliberately stripping it could be seen as intentional circumvention. The plaintiffs claim this shows more than negligence: it reveals a conscious intent to profit from copyrighted material.

This case could affect how generative AI companies acquire and manage training data. Should the court rule against Anthropic, the precedent might require AI developers to license copyrighted books, songs, images, and other works.

For startups and academic institutions that lack the means to negotiate large licensing agreements, this could significantly raise development costs and slow progress. On the other hand, requiring developers of commercial AI systems to pay artists and rights holders might help sustain the creative economy. An Anthropic win, meanwhile, could bring nearly all AI training data under fair use. That would ease developers' concerns but could deepen tensions between the tech and creative industries.

AI "hallucinations" add an interesting twist. Anthropic argues that the model's output is driven by statistical inference rather than actual copying, so Claude reproduces copyrighted lyrics only rarely and accidentally.

This argument could collapse if the model routinely reproduces copyrighted material in response to similar prompts. Critics argue that if a system can consistently replicate copyrighted material, the problem is design, not accident. Developers may need to build in safeguards against copying.

Anthropic's situation is not unique. Similar lawsuits against OpenAI, Stability AI, and Meta have drawn attention across the AI industry. If judges decide that fair use does not cover training on copyrighted material, the generative AI business model could be upended.

As a result, several companies are exploring ways to train models on licensed or public domain data. Others are funding tools that let content creators choose whether their work is included in datasets. Behind these initiatives is a growing recognition that data ethics matter as much as technology. On the policy side, governments may need to step in: AI legislation could define fair use in a way that balances innovation and IP rights.

As AI becomes more present in daily life, how and from what it learns will matter more and more. The Anthropic case highlights the need for open data practices, respect for creative ownership, careful legal interpretation, and transparent policies. Whether or not fair use proves sufficient, the case reveals the need for a new social compact between content owners and technology developers. Without such a settlement, the battle between innovation and IP rights will only intensify.

The decision, expected in the coming months, could be historic. It may protect AI developers or uphold the rights of artists and labels. Whatever the outcome, it will shape how machines learn from human work and whether creators can be assured of fair treatment.

More than a copyright dispute, Anthropic's lawsuit with music publishers tests the limits of fair use in the age of AI. The case underscores the need for legal clarity and ethical responsibility among innovators, creators, governments, and consumers. As generative AI develops, tech leaders, governments, and courts must work together to create rules that support innovation and safeguard content creators. While the court may settle the fair use question, the real answer will come from cooperation toward a future where both creativity and technology flourish.

Advertisement

Learn 8 effective methods to add new keys to a dictionary in Python, from square brackets and update() to setdefault(), loops, and defaultdict

Anthropic faces a legal battle over AI-generated music, with fair use unlikely to shield the company from claims.

Learn seven methods to convert a string to an integer in Python using int(), float(), json, eval, and batch processing tools like map() and list comprehension

Looking for quality data science blogs to follow in 2025? Here are the 10 most practical and insightful blogs for learning, coding, and staying ahead in the data world

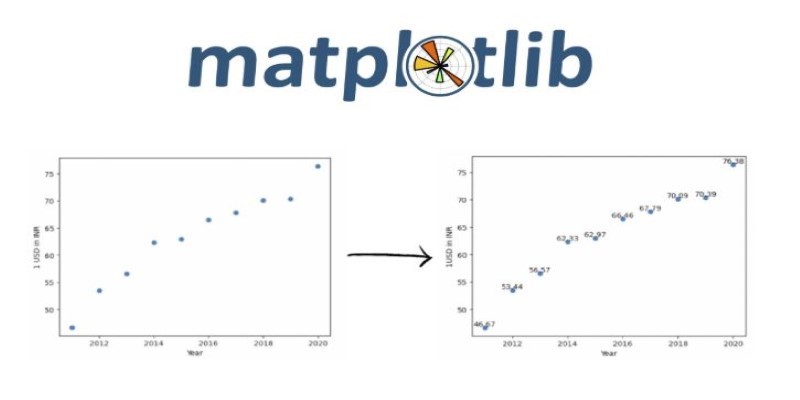

Explore effective ways for scatter plot visualization in Python using matplotlib. Learn to enhance your plots with color, size, labels, transparency, and 3D features for better data insights

Learn how logic gates work, from basic Boolean logic to hands-on implementation in Python. This guide simplifies core concepts and walks through real-world examples in code

Jio Brain by Jio Platforms brings seamless AI integration to Indian enterprises by embedding intelligence into existing systems without overhauls. Discover how it simplifies real-time data use and smart decision-making

Ask QX by QX Lab AI is a multilingual GenAI platform designed to support over 100 languages, offering accessible AI tools for users around the world

Compliance analytics ensures secure data storage, meets PII standards, reduces risks, and builds customer trust and confidence

Discover how Getty's Generative AI by iStock provides creators and brands with safe, high-quality commercial-use AI images.

Discover multilingual LLMs: how they handle 100+ languages, code-switching and 10 other things you need to know.

SAS acquires a synthetic data generator to boost AI development, improving privacy, performance, and innovation across industries